INSTINCT: Instance-Level Interaction Architecture for Query-Based Collaborative Perception

Image credit: Unsplash

Image credit: UnsplashAbstract

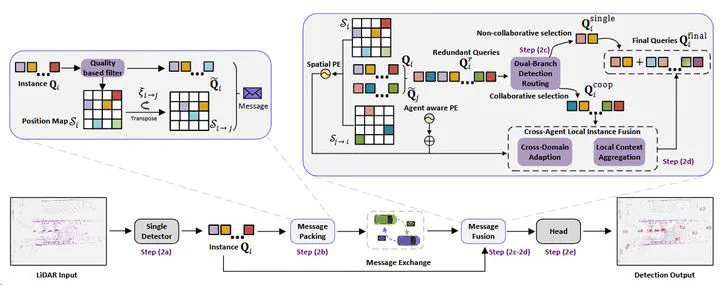

Collaborative perception systems overcome single-vehicle limitations in long-range detection and occlusion scenarios by integrating multi-agent sensory data, improving accuracy and safety. However, frequent cooperative interactions and real-time requirements impose stringent bandwidth constraints. Previous works proves that query-based instance-level interaction reduces bandwidth demands and manual priors, however, LiDAR-focused implementations in collaborative perception remain underdeveloped, with performance still trailing state-of-the-art approaches. To bridge this gap, we propose INSTINCT (instance-level interaction architecture), a novel collaborative perception framework featuring three core components: 1. a quality-aware filtering mechanism for high-quality instance feature selection; 2. a dual-branch detection routing scheme to decouple collaboration-irrelevant and collaboration-relevant instances; 3. a Cross Agent Local Instance Fusion module to aggregate local hybrid instance features. Additionally, we enhance the ground truth (GT) sampling technique to facilitate training with diverse hybrid instance features. Extensive experiments across multiple datasets demonstrate that INSTINCT achieves superior performance. Specifically, our method achieves an improvement in accuracy 13.23%/32.24% in DAIR-V2X and V2V4Real while reducing the communication bandwidth to 1/281 and 1/264 compared to state-of-the-art methods. The code is available at https://github.com/CrazyShout/INSTINCT.

Type

Publication

IEEE International Conference on Computer Vision

Click the Cite button above to demo the feature to enable visitors to import publication metadata into their reference management software.

Create your slides in Markdown - click the Slides button to check out the example.

Add the publication’s full text or supplementary notes here. You can use rich formatting such as including code, math, and images.